WhiteBalance

| Abstract | |
|---|---|
| Author | SomeJoe |
| Version | v1.0 |
| Download | WhiteBalance100.zip |
| Category | Levels and Chroma |
| License | Closed source |
| Discussion | Doom9 Thread |

Description

WhiteBalance is designed to correct the white balance of a clip with a greater degree of control and accuracy than other methods of correcting white balance.

Requirements

What is white balance?

White balance is a measure of whether true white objects appear white in the video. Often, white balance is a setting available on the video camera. Consumer cameras generally have automatic white balance, or sometimes have an indoor/outdoor white balance adjustment. Professional cameras have a manual white balance, which can be adjusted in many steps to give a correct white balance to the video.

Why is white balance adjustment required?

Cameras have to assume that the scene is lit by a light source with a certain character. For instance, a true white object when lit outdoors reflects the outdoor light, giving the camera a certain intensity of red, green, and blue wavelengths. But the same white object, when lit by indoor lighting, reflects the indoor light, which has a different ratio of red, green, and blue wavelengths. The camera must compensate for the difference in the light source to write a 255/255/255 digital value (white) onto its recording tape. If there is no compensation, a true white object will be recorded as having a slight color on tape. The most common example occurs when a camera is set for standard or automatic white balance (which is calculated for outdoor/daylight intensities), but is then used to film indoors. The indoor lighting does not have as much blue in it, so white objects are recorded on tape with a yellowish cast.

This filter can compensate for video that was filmed with incorrect white balance.

Why write another filter to do this when there are other methods?

I was not satisfied with other methods of adjusting white balance, because they come with shortcomings that are not easily overcome.

Some other methods of white balancing video fall into one of two categories:

- 1.) "Levels" adjustment filters, such as the Adobe Photoshop "Auto Levels" filter, or some implementations of AVISynth's RGBAdjust() filter.

- These filters work in RGB color space, and attempt to make white objects equal to 255/255/255, and black objects equal to 0/0/0. Given a reference to a white object and a black object, they then scale the red, green, and blue values throughout the image.

- This method has the following problems:

- a.) You need a pure white and pure black object in your video so that you can tell the filter what RGB values are at the extreme. This may or may not be possible, as you may not have pure white and black objects available in the video.

- b.) Applying the levels algorithm like this will change the contrast and brightness settings overall. This may make the video too dark or too light.

- c.) If there are colored objects that are brighter than your brightest white (for instance a candle flame), or colored objects darker than your darkest gray (for instance, a navy blue fabric), luminance clipping will occur which removes shadow and highlight detail.

- d.) The Levels adjustment algorithm is only accurate at the white and black points. If the camera's red/green/blue intensity response was somewhat non-linear, midtones may not be white balanced properly even after the algorithm is applied.

- 2.) YUV static-adjustment filters, such as AVISynth's ColorYUV() filter.

- This method works in YUV color space, and applies an offset to the U and V values throughout a clip.

- This works a bit better than the Levels algorithm, but:

- a.) The camera's color response may not have been constant throughout the luminance range. In other words, the camera may have recorded blacks fairly accurately, but only shifted towards yellow on the whites. By applying a constant offset to all U and V values, whites are corrected from yellow to white, but at the same time, blacks are shifted from black to blue.

- b.) Colors near the extremes of saturation will either lose some saturation due to the shift, or gradients near the edge of saturation will get clipped, possibly resulting in some visible banding.

This White Balance filter takes these problems into account, and is able to adjust camera white to true white throughout the luminance range.
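The two failure modes described above can be illustrated with a minimal Python sketch. It is not the code of any of the filters named here; `levels_scale` and `static_uv_offset` are hypothetical helpers, and the sample values are made up for illustration.

```python
def levels_scale(value, black, white):
    """Stretch one 8-bit channel so that `black` maps to 0 and `white` to 255."""
    scaled = (value - black) * 255.0 / (white - black)
    # Anything that falls outside 0..255 after scaling is clipped.
    return max(0, min(255, round(scaled)))


def static_uv_offset(u, v, du, dv):
    """Add the same chroma offset to a (U, V) pair, whatever the luminance."""
    def clamp(c):
        return max(16, min(240, c))
    return clamp(u + du), clamp(v + dv)


# Levels: the reference white has red = 176 and the reference black red = 56.
# A candle flame with red = 230 is brighter than the reference white, so it
# clips to 255 and its highlight detail is lost.
print(levels_scale(176, 56, 176))  # reference white
print(levels_scale(230, 56, 176))  # brighter than the reference white

# Static offset: whites carry a yellow cast (U = 118) while blacks are
# neutral (U = 128). The +10 offset that fixes the whites pushes the
# blacks towards blue.
print(static_uv_offset(118, 128, 10, 0))  # white becomes neutral
print(static_uv_offset(128, 128, 10, 0))  # black becomes bluish
```

In both cases the correction is the same everywhere in the image, which is exactly what a luminance-dependent correction avoids.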

How does the White Balance filter work?

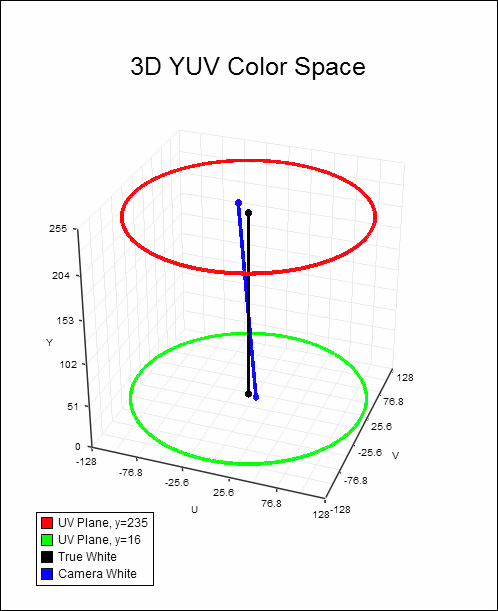

White Balance filter works internally in YUV color space. Imagine the following 3D diagram of YUV color space:

In this hypothetical diagram, the camera's white balance was incorrect, resulting in true white objects shifted towards yellow (the blue dot in the red plane).

At the same time, black objects were shifted towards blue (blue dot in the green plane). Thus the "white axis" of the camera is diagonal within the YUV

cylindrical color space, and never even intersects the black "true white" axis. The White Balance filter intelligently scales U and V values within each luminance

plane, avoiding decreasing saturation where a color was fully saturated, and avoiding clipping saturated colors at the opposite extreme. Once the U and V values are

scaled, the camera's white axis lines up with the true white axis, making all whites, grays, and blacks perfectly neutral throughout all luminance planes.
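The filter is closed source, so its exact math is not known. The following Python sketch is only one plausible reading of the description above, under two assumptions: the camera's white axis is a straight line through two reference points, and each chroma component is remapped piecewise-linearly so that the range limits stay fixed while the axis moves to neutral (128). The names `axis_point`, `remap`, and `correct_pixel` are hypothetical.

```python
def axis_point(y, p1, p2):
    """Linearly interpolate the camera's white axis at luminance y.

    p1 and p2 are (y, u, v) reference points on the axis, for example a
    near-white and a near-black object. Their y values must differ.
    """
    (y1, u1, v1), (y2, u2, v2) = p1, p2
    t = (y - y2) / (y1 - y2)
    return u2 + t * (u1 - u2), v2 + t * (v1 - v2)


def remap(c, axis, lo=16.0, hi=240.0, neutral=128.0):
    """Piecewise-linear map with lo -> lo, axis -> neutral, hi -> hi.

    Keeping both ends fixed means fully saturated colors are neither
    desaturated nor pushed out of range (an assumption of this sketch).
    """
    axis = min(max(axis, lo + 1.0), hi - 1.0)  # keep both segments non-empty
    if c <= axis:
        return lo + (c - lo) * (neutral - lo) / (axis - lo)
    return hi - (hi - c) * (hi - neutral) / (hi - axis)


def correct_pixel(y, u, v, p1, p2):
    """Move the camera's white axis onto U = V = 128 in this pixel's luminance plane."""
    ua, va = axis_point(y, p1, p2)
    return y, remap(u, ua), remap(v, va)


# A yellowish near-white reference and a correctly recorded black.
white_ref = (200, 118, 134)
black_ref = (16, 128, 128)
print(correct_pixel(200, 118, 134, white_ref, black_ref))  # the cast is removed
print(correct_pixel(16, 128, 128, white_ref, black_ref))   # black is left alone
```

Because the axis position is recomputed for every luminance value, whites can be corrected without tinting blacks, which a single constant offset cannot do.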

Syntax and Parameters

- WhiteBalance (clip, int "r1", int "g1", int "b1", int "y1", int "u1", int "v1", int "r2", int "g2", int "b2", int "y2", int "u2", int "v2", bool "interlaced", bool "split")

- int r1 =

- int g1 =

- int b1 =

- These are the red, green, and blue values of a point on the camera's white axis.

- In other words, these are the red, green, and blue values of a white or near-white object in the original video.

- Range: 0 to 255

- int y1 =

- int u1 =

- int v1 =

- Optionally, if you don't want to specify red, green, and blue values of your white or near-white object, you can specify a YUV value of the white or near-white object.

- Range: 16 to 235 for y1

- Range: 16 to 240 for u1 and v1

- Either r1, g1, b1 OR y1, u1, v1 must be given.

- These values tell the White Balance filter one point on the camera's white axis line (the blue line in the 3D YUV graph).

- If both r1, g1, b1 AND y1, u1, v1 are given, the filter uses the r1, g1, b1 values, and y1, u1, v1 are not used.

- int r2 =

- int g2 =

- int b2 =

- Optionally, these are the red, green, and blue values of another point on the camera's white axis.

- In other words, these are the red, green, and blue values of a black or near-black object in the original video.

- Range: 0 to 255

- int y2 =

- int u2 =

- int v2 =

- Optionally, if you don't want to specify red, green, and blue values of your black or near-black object, you can specify a YUV value of the black or near-black object.

- Range: 16 to 235 for y2

- Range: 16 to 240 for u2 and v2

- Both r2, g2, b2 AND y2, u2, v2 are optional.

- If they are not specified, the White Balance filter assumes that black objects were filmed correctly by the camera (i.e. the camera's white axis, the blue line in the diagram, coincides with the true white axis at y=16, the black luminance plane).

- bool interlaced = false

- This parameter is a boolean value (i.e. true or false), and tells the filter whether the input video is interlaced or not.

- This parameter is optional, and defaults to false. It doesn't need to be specified unless the input color space is YV12 (see YV12 color space explanation later in the document for details).

- bool split = false

- This parameter is a boolean value (i.e. true or false), and is used for troubleshooting.

- If set to true, the White Balance filter will only white balance the right half of your video frame (the left half will not be processed).

- This is so that you can look at a before/after split to see what the White Balance filter has done to the video. This parameter is optional, default is false.

Examples

1. Suppose I have a clip that has incorrect white balance. I export a frame from it that has a near-white object and a near-black object (i.e. I know that the objects are supposed to be nearly white and nearly black, but the camera filmed both of them with an orange-ish tint).

I load that frame into the graphics application of my choice and look at the RGB values of each object.

The near-white object has a coloration of RGB 176/153/132. The near-black object has a coloration of RGB 56/40/32.

I white balance this clip as follows:

WhiteBalance(r1=176, g1=153, b1=132, r2=56, g2=40, b2=32)

2. Suppose I have another clip with incorrect white balance.

It looks like the camera was correct with black values, but white values are shifted towards blue because the scene was filmed outdoors under an overcast sky.

I export a frame of the video, and my graphics application says that a near white object has a coloration of RGB 210/215/229.

In addition, I want to see a before/after split of this video to look at the difference. Further, this is interlaced video, and I'm using YV12 color space.

I white balance this clip as follows:

WhiteBalance(r1=210, g1=215, b1=229, interlaced=true, split=true)
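To see why these reference colors describe a color cast, it can help to look at them in YUV. The Python sketch below converts the RGB values from the two examples using the standard Rec.601 studio-range equations. That matrix is an assumption (it matches the 16 to 235 and 16 to 240 ranges given for the parameters, but the filter's own conversion is not documented), and `rgb_to_yuv601` is a hypothetical helper name.

```python
def rgb_to_yuv601(r, g, b):
    """Convert 8-bit RGB to Rec.601 studio-range YUV (floating point)."""
    y = 16 + (65.481 * r + 128.553 * g + 24.966 * b) / 255
    u = 128 + (-37.797 * r - 74.203 * g + 112.0 * b) / 255
    v = 128 + (112.0 * r - 93.786 * g - 18.214 * b) / 255
    return y, u, v


samples = [
    ("example 1, near-white", (176, 153, 132)),
    ("example 1, near-black", (56, 40, 32)),
    ("example 2, near-white", (210, 215, 229)),
]
for label, rgb in samples:
    y, u, v = rgb_to_yuv601(*rgb)
    # A neutral gray has U = V = 128; the distance from 128 is the cast.
    print(f"{label}: Y={y:.1f} U={u:.1f} V={v:.1f}")
```

With this matrix, both objects in example 1 have U below 128 and V above 128 (an orange-ish cast), while the near-white object in example 2 has U above 128 (a bluish cast).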

Color Space Considerations

The White Balance filter works in the following color spaces: RGB24, RGB32, YUY2, and YV12.

Some notes for each color space:

RGB32 and RGB24

- The White Balance filter is most accurate in RGB24 and RGB32 color spaces: the translation of each pixel to a corrected color involves the luminance value, and in these color spaces the chrominance information is not downsampled. Each pixel's luminance value is individually used to translate the pixel's color to the corrected value.

- Because the translation matrix works in YUV color space internally, using RGB24 or RGB32 color space from your AVISynth script results in a substantial speed penalty. The filter's speed is about half of its potential maximum when working in RGB24 or RGB32 color space.

- WhiteBalance uses 32-bit integer implementations of RGB<->YUV conversions, so they run reasonably fast, and according to my spreadsheets are as accurate as the floating point implementations.
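The idea of an integer RGB to YUV conversion that tracks the floating point result can be sketched as follows. This is not the filter's actual code: the Rec.601 studio-range coefficients, the 16 fractional bits, and the function names are all assumptions made for illustration.

```python
# Rec.601 studio-range coefficients and offsets (assumed, see above).
COEFFS = {
    "y": (65.481, 128.553, 24.966, 16),
    "u": (-37.797, -74.203, 112.0, 128),
    "v": (112.0, -93.786, -18.214, 128),
}
SHIFT = 16  # number of fractional bits in the fixed-point coefficients

# Pre-scale each coefficient once; the largest sum stays well below 2**31.
FIXED = {
    name: tuple(round(c / 255.0 * (1 << SHIFT)) for c in (cr, cg, cb)) + (off,)
    for name, (cr, cg, cb, off) in COEFFS.items()
}


def rgb_to_yuv_float(r, g, b):
    """Reference conversion using floating point, rounded to integers."""
    return tuple(
        round(off + (cr * r + cg * g + cb * b) / 255.0)
        for cr, cg, cb, off in COEFFS.values()
    )


def rgb_to_yuv_int(r, g, b):
    """Same conversion using only integer multiplies, adds, and a shift."""
    half = 1 << (SHIFT - 1)  # adding half before shifting rounds to nearest
    return tuple(
        off + ((cr * r + cg * g + cb * b + half) >> SHIFT)
        for cr, cg, cb, off in FIXED.values()
    )


# Compare both versions over a coarse grid of RGB values.
worst = max(
    abs(a - b)
    for r in range(0, 256, 15)
    for g in range(0, 256, 15)
    for bl in range(0, 256, 15)
    for a, b in zip(rgb_to_yuv_float(r, g, bl), rgb_to_yuv_int(r, g, bl))
)
print("largest difference between float and integer results:", worst)
```

With this many fractional bits the two versions can differ only when the exact value sits almost exactly halfway between two integers, so any difference is at most one code value.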

YUY2

- This color space is less accurate than RGB because chrominance information is downsampled to half resolution in the horizontal direction (4:2:2 downsampling). For every two pixels, there is only one color. To translate that color, the filter uses the average luminance of the two pixels to determine which luminance plane to use to apply the color correction.

- YUY2 runs at near the filter's maximum theoretical speed.
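The pairing of one color with two luminance samples can be sketched in a few lines of Python. YUY2 stores pixels in packed groups of four bytes (Y0, U, Y1, V); the rounding used for the average and the helper name `yuy2_pair_luma` are assumptions of this sketch, not details taken from the filter.

```python
def yuy2_pair_luma(row):
    """Yield (average_luma, u, v) for each Y0 U Y1 V group in a packed YUY2 row."""
    for i in range(0, len(row) - 3, 4):
        y0, u, y1, v = row[i:i + 4]
        # One chroma pair is shared by two pixels, so their mean luminance
        # decides which luminance plane the correction is taken from.
        yield (y0 + y1 + 1) >> 1, u, v


row = bytes([100, 120, 110, 130, 16, 128, 17, 128])
for avg_y, u, v in yuy2_pair_luma(row):
    print(avg_y, u, v)
```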

YV12

- This color space is less accurate than both RGB and YUY2 color spaces because chrominance information is downsampled to half resolution in BOTH the horizontal and vertical directions (4:2:0 downsampling). For every 4 pixels, there is only one color. To translate that color, the filter uses the average luminance of the 4 pixels to determine which luminance plane to use to apply the color correction.

- The filter takes into account which lines to use for luminance averaging based on the interlaced parameter. With non-interlaced footage, the chrominance samples come from adjacent lines, so the filter uses the adjacent lines' luminance values when computing the 4-pixel average. With interlaced footage, the chrominance samples come from adjacent lines within a single field, so the filter needs to use the adjacent lines' luminance values from the same field to compute the 4-pixel average. With interlaced=true, the filter does this.

- YV12 runs at near the filter's maximum theoretical speed.

- Note that the interlaced parameter should be set to true only when all of the following conditions are met:

- 1. The input color space to the filter is YV12.

- 2. The footage is interlaced video.

- 3. The video is frame-based (i.e. you have NOT called SeparateFields() before you call WhiteBalance()).

- In all other conditions, you may omit the interlaced parameter.
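The difference between the two line pairings can be sketched in Python. The row mapping below follows the usual 4:2:0 layout (progressive chroma rows cover two adjacent frame lines, interlaced chroma rows cover two lines of the same field); the rounding and the function names are assumptions of this sketch rather than details of the filter.

```python
def luma_rows_for_chroma_row(c, interlaced):
    """Return the two frame lines whose luma shares chroma row c."""
    if not interlaced:
        return 2 * c, 2 * c + 1      # two adjacent frame lines
    field = c % 2                    # 0 = first field, 1 = second field
    first = 4 * (c // 2) + field
    return first, first + 2          # two adjacent lines of the same field


def average_luma(luma, c, x, interlaced=False):
    """Average the four luma samples that share chroma sample (row c, column x).

    `luma` is a list of rows, each a list of 8-bit luminance values.
    """
    r0, r1 = luma_rows_for_chroma_row(c, interlaced)
    total = (luma[r0][2 * x] + luma[r0][2 * x + 1]
             + luma[r1][2 * x] + luma[r1][2 * x + 1])
    return (total + 2) >> 2


# Four lines of a frame whose two fields differ strongly in brightness.
luma = [[10, 10], [200, 200], [20, 20], [220, 220]]
print(average_luma(luma, 0, 0, interlaced=False))  # mixes both fields
print(average_luma(luma, 0, 0, interlaced=True))   # first field only
print(average_luma(luma, 1, 0, interlaced=True))   # second field only
```

When the fields differ (for example during motion), averaging across fields picks a luminance plane that matches neither field, which is why the parameter matters for frame-based interlaced YV12.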

Changelog

| Version | Date | Changes |
|---|---|---|
| v1.0 | 2006/01/22 | Initial release |

Links

- Doom9 Forum - WhiteBalance discussion.

- Archive.org - Archived download.
